Strange comparison, I know.

Scripting vs declarative approaches

The aspect I’m looking at is the scripting (aka imperative) approach vs the declarative approach. In many situations I choose scripting over the declarative approach because, in the situations I face, the downsides of the declarative approach outweigh its benefits.

Declarative approach downsides

What are the downsides of Chef, Puppet and other declarative systems? The main ones are complexity and additional external dependencies. These lead to:

- Fragility

- More maintenance

- More setup for anything except trivial cases

I can’t stress the price of complexity enough.

Declarative approach advantages

When the imperative approach would mean too much work, the declarative approach has the advantage. Think of SQL statements: coding each query by hand would be an enormous amount of work. To summarize:

- Concise and meaningful code

- A lot of work done by a small amount of code
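To make the SQL point concrete, here is a small Python sketch using the standard-library sqlite3 module and a made-up orders table. It computes the same per-customer totals twice: once with a single declarative SQL statement, and once by spelling out the scan, filter, grouping and sorting by hand. The table and its rows are illustrative only.

```python
import sqlite3
from collections import defaultdict

# Illustrative in-memory data; the table and rows are made up for this sketch.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (customer TEXT, amount INTEGER)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("alice", 10), ("bob", 5), ("alice", 7), ("carol", 3), ("bob", 1)],
)

# Declarative: state WHAT you want; the database decides HOW to compute it.
declarative = conn.execute(
    "SELECT customer, SUM(amount) FROM orders "
    "WHERE amount > 2 GROUP BY customer ORDER BY customer"
).fetchall()

# Imperative: every step (scan, filter, group, sum, sort) is written by hand.
totals = defaultdict(int)
for customer, amount in conn.execute("SELECT customer, amount FROM orders"):
    if amount > 2:
        totals[customer] += amount
imperative = sorted(totals.items())

print(declarative)  # [('alice', 17), ('bob', 5), ('carol', 3)]
print(imperative)   # [('alice', 17), ('bob', 5), ('carol', 3)]
```

With one table and one filter the gap is small; with joins, indexes and query planning it grows quickly, which is where the declarative approach pays for itself.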

Value of tools

I evaluate tools by TCO (total cost of ownership).

Example 1: making sure a file has specific content. This could be as simple as `echo my_content > my_file` in a script, or as complex as installing a Chef/Puppet/Your-cool-tool-du-jour server and so on…
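For scale, here is what an idempotent version of Example 1 might look like as a few lines of script. This is a minimal Python sketch; `ensure_file_content` is a hypothetical helper name, not part of any tool. It writes the file only when the content differs and reports whether it changed anything, so running it repeatedly is harmless.

```python
import tempfile
from pathlib import Path


def ensure_file_content(path: Path, content: str) -> bool:
    """Make sure `path` contains exactly `content` (hypothetical helper).

    Returns True if the file had to be written, False if it was already correct,
    so running it twice in a row is harmless (idempotent).
    """
    if path.exists() and path.read_text() == content:
        return False  # already in the desired state: do nothing
    path.write_text(content)
    return True


with tempfile.TemporaryDirectory() as tmp:
    target = Path(tmp) / "my_file"
    # `echo my_content > my_file` writes the text plus a trailing newline.
    first = ensure_file_content(target, "my_content\n")
    second = ensure_file_content(target, "my_content\n")
    print(first, second)  # True False
```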

Example 2: making sure that a specific load balancer (AWS ELB) is set up. This could be a script that uses the AWS CLI, or a declarative tool such as CloudFormation or Terraform (I haven’t used Terraform myself yet). Writing a script that idempotently configures the security groups, the load balancer and its properties is much more work than the `echo ...` from the previous example.
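To give a feel for that extra work, here is a rough Python sketch of the script approach. It runs against a tiny in-memory `FakeCloud` instead of AWS; `FakeCloud`, `ensure_sec_group`, `ensure_elb` and the property names are all hypothetical, and the sketch still ignores listeners, health checks, subnets and instances. Even so, every resource needs its own find / create / compare / update logic.

```python
from dataclasses import dataclass, field


@dataclass
class FakeCloud:
    """In-memory stand-in for AWS (hypothetical; not the AWS CLI or SDK)."""
    sec_groups: dict = field(default_factory=dict)  # name -> set of open ports
    elbs: dict = field(default_factory=dict)        # name -> properties
    calls: list = field(default_factory=list)       # log of mutating calls


def ensure_sec_group(cloud: FakeCloud, name: str, ports: set) -> None:
    """Create the group if missing, then open/close ports so they match."""
    if name not in cloud.sec_groups:
        cloud.sec_groups[name] = set()
        cloud.calls.append(("create_sec_group", name))
    current = cloud.sec_groups[name]
    for port in sorted(ports - current):
        current.add(port)
        cloud.calls.append(("authorize", name, port))
    for port in sorted(current - ports):
        current.discard(port)
        cloud.calls.append(("revoke", name, port))


def ensure_elb(cloud: FakeCloud, name: str, props: dict) -> None:
    """Create the load balancer if missing, else modify only differing properties."""
    if name not in cloud.elbs:
        cloud.elbs[name] = dict(props)
        cloud.calls.append(("create_elb", name))
        return
    current = cloud.elbs[name]
    for key, wanted in props.items():
        if current.get(key) != wanted:
            current[key] = wanted
            cloud.calls.append(("modify_elb", name, key))


cloud = FakeCloud()
desired = {"port": 443, "protocol": "TCP", "security_group": "myservice-elb"}
for _ in range(2):  # the second run must not change anything
    ensure_sec_group(cloud, "myservice-elb", {443})
    ensure_elb(cloud, "prod-myservice", desired)

print(cloud.calls)
```

Only the first pass records calls; the second pass finds everything already in the desired state.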

While TCO depends greatly on your specific situation, I argue that tools that save larger amounts of work, as in example 2, are in general more likely to have a better TCO than tools applied to cases like example 1.

“… but Chef can manage AWS too, you know?”

Yes, I know… and I don’t like this solution. I would like to manage AWS from my laptop or from a dedicated management machine, not from wherever the Chef client runs. Also (oh no!), I don’t currently use Chef, and bringing it in just to manage AWS does not seem like a good idea.

The same goes for managing AWS with Puppet.

Summary

Declarative tools always bring complexity, and that is a huge minus. The more complex the tool, the more work it takes to operate. Make sure the amount of work your declarative tool saves is greater than the amount of work it takes to operate.

Opinion: we can do better

I like scripting solutions for their relative simplicity (when the scripts are written professionally). I suggest a combined approach; let’s call it “declarative primitives”.

Imagine a scripting library that provides primitives such as AwsElb, AwsInstance and AwsSecGroup. Using these primitives does not force you to give up flow control. There are no dependency graphs: you are still writing a script, with minimal added complexity over regular scripting.
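Here is a minimal Python sketch of the idea, assuming a toy in-memory `Cloud` instead of AWS. `Cloud` and `Primitive` are hypothetical names chosen for illustration and are not the API of the NGS library discussed here (which uses names like AwsElb and a `converge()` method). The primitive wraps “find, then create or update”, while ordinary `if`/`for` statements in the script decide what gets converged.

```python
from dataclasses import dataclass, field


@dataclass
class Cloud:
    """Toy in-memory stand-in for a cloud API (hypothetical, not AWS)."""
    resources: dict = field(default_factory=dict)  # name -> properties
    log: list = field(default_factory=list)        # what converge() changed


class Primitive:
    """Hypothetical declarative primitive: desired state plus converge().

    Not the API of the library described in the post; just the shape of the idea.
    """

    def __init__(self, cloud: Cloud, name: str, props: dict):
        self.cloud, self.name, self.props = cloud, name, dict(props)

    def converge(self) -> "Primitive":
        current = self.cloud.resources.get(self.name)
        if current is None:
            self.cloud.log.append(f"create {self.name}")
        elif current != self.props:
            self.cloud.log.append(f"update {self.name}")
        else:
            return self  # already as desired: no-op
        self.cloud.resources[self.name] = dict(self.props)
        return self


cloud = Cloud()
env = "staging"  # an ordinary script variable
for svc in ["myservice", "otherservice"]:
    # Plain flow control decides what to converge; no dependency graph.
    if env == "prod" or svc == "myservice":
        Primitive(cloud, f"{env}-{svc}-elb", {"port": 443}).converge()

Primitive(cloud, "staging-myservice-elb", {"port": 443}).converge()   # no-op
Primitive(cloud, "staging-myservice-elb", {"port": 8443}).converge()  # update
print(cloud.log)  # ['create staging-myservice-elb', 'update staging-myservice-elb']
```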

Such a library is under development. An additional advantage of this library is that the whole state will be kept in the resources’ tags. Other solutions keep separate state files, and I don’t like that.
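A rough Python illustration of keeping state in tags, assuming a toy in-memory `TaggedCloud` (hypothetical, not an AWS API): the script locates “its” resource by matching tags, so a later run, even from a different machine, finds the existing resource instead of consulting a local state file. Refusing to proceed when several resources match is one possible design choice, assumed here for safety.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class Resource:
    id: str
    tags: dict


@dataclass
class TaggedCloud:
    """Toy in-memory cloud (hypothetical); resources carry their own tags."""
    resources: list = field(default_factory=list)
    _ids: count = field(default_factory=lambda: count(1))

    def find(self, tag_filter: dict) -> list:
        # A resource matches when it has every key/value pair in the filter.
        return [r for r in self.resources
                if all(r.tags.get(k) == v for k, v in tag_filter.items())]

    def create(self, tags: dict) -> Resource:
        res = Resource(id=f"res-{next(self._ids)}", tags=dict(tags))
        self.resources.append(res)
        return res


def ensure_tagged(cloud: TaggedCloud, tags: dict) -> Resource:
    """Find the resource by its tags or create it; no local state file needed."""
    found = cloud.find(tags)
    if len(found) > 1:
        raise RuntimeError(f"ambiguous: {len(found)} resources match {tags}")
    return found[0] if found else cloud.create(tags)


cloud = TaggedCloud()
wanted = {"env": "staging", "role": "myservice-elb"}
first = ensure_tagged(cloud, wanted)
second = ensure_tagged(cloud, wanted)  # e.g. a later run on another machine
print(first.id, second.id, len(cloud.resources))  # res-1 res-1 1
```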

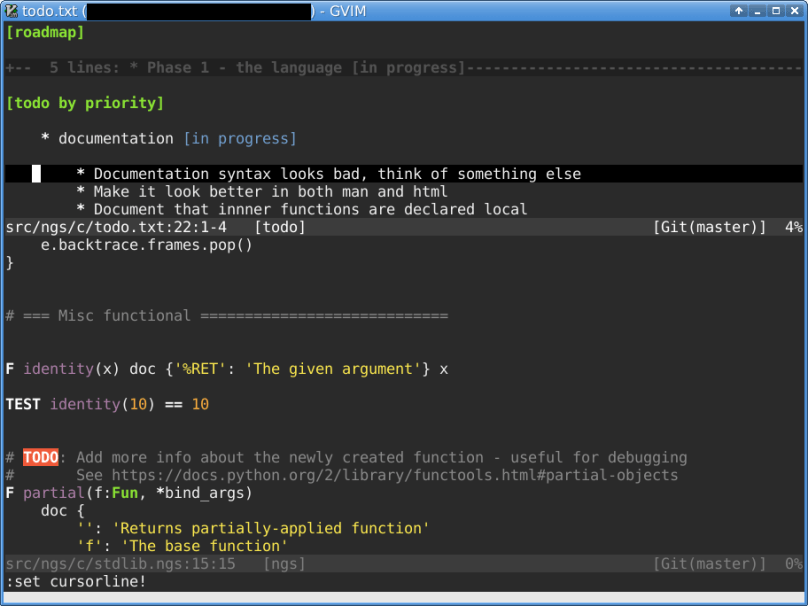

Here is a sample of (censored) code in the NGS language that uses the library:

```ngs
my_vpc_ancor = {'aws:cloudformation:stack-name': 'my-vpc'}

elb = AwsElb(
    "${ENV.ENV}-myservice",
    {
        'tags': %{
            env ${ENV.ENV}
            role myservice-elb
        },
        'listeners': [
            %{
                Protocol TCP
                LoadBalancerPort 443
                InstanceProtocol TCP
                InstancePort 443
            }.n()
        ]
        'subnets': AwsSubnet(my_vpc_ancor).expect(2)
        'health-check': %{
            UnhealthyThreshold 5
            Timeout 5
            HealthyThreshold 3
            Interval 10
            Target 'SSL:443'
        }.n()
        'instances': AwsInstance({'env': ENV.ENV, 'role': 'myservice'}).expect()
    }
)

elb.converge()
```

It creates a load balancer in an already existing VPC (created by CloudFormation) and attaches existing instances to it. The example is not complete, since the library is a work in progress, but it does work.

Have fun and watch your TCO!