(During a code review, looking at TypeScript code.)

Colleague: … type casting …

Me: hold a sec, I don’t remember anything called type casting in TypeScript

Colleague: you know, “as”

Me: yep, there is “as”, I just don’t think it was called type casting when I last re-read the official documentation a couple of months ago.

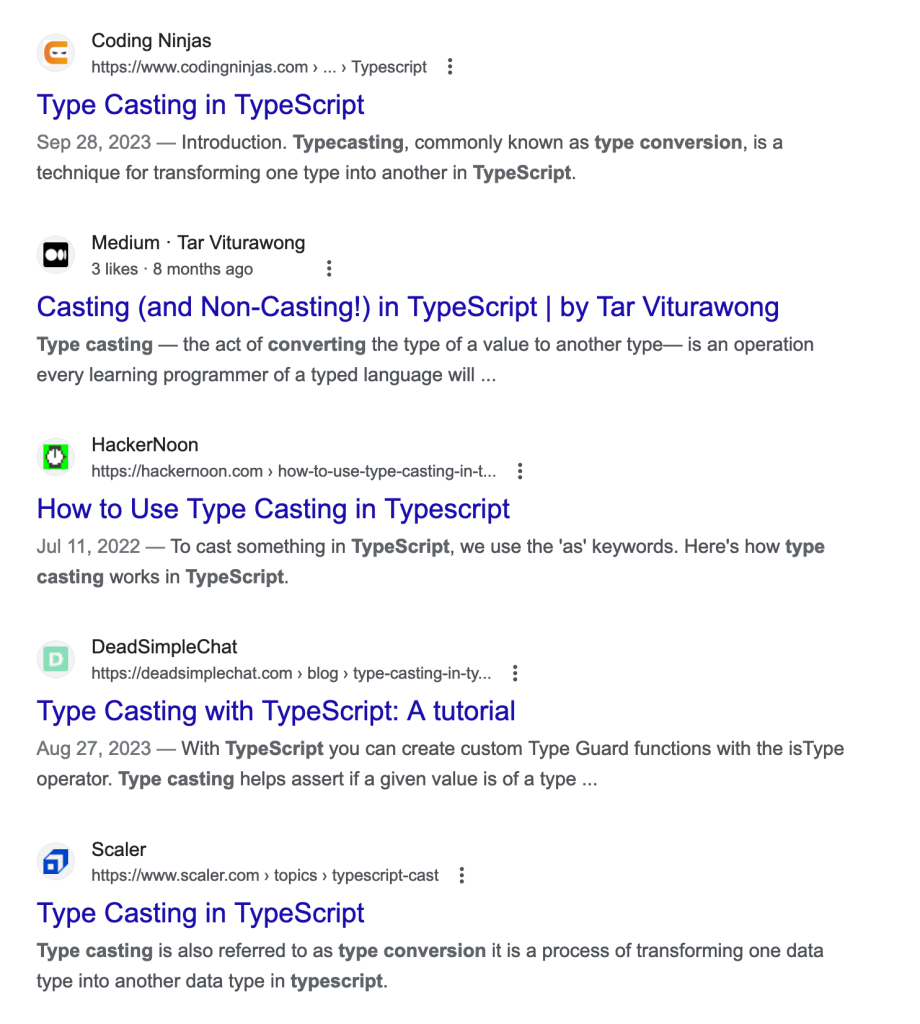

Colleague: hold a sec, here (shows me Google search results)

Search Results

My suspicion that something was off only grew when none of the results came from the official documentation.

TypeScript’s “as”

I clicked on a few of these articles. They are talking about “as”. What does the official TypeScript documentation tell us about “as”? It’s found under the heading Type Assertions. I didn’t see anything about type casting in the documentation.

Type Assertion vs Type Casting

Same thing, you are just showing off by being pedantic about naming!

Statistically probable response

What if I told you there is a practical difference between type assertion and type casting which confused my colleague?

Sometimes you will have information about the type of a value that TypeScript can’t know about… Like a type annotation, type assertions are removed by the compiler and won’t affect the runtime behavior of your code.

Official TypeScript documentation

You are telling TS which type the value has. This information is used only at compile time; nothing happens at runtime. “Cast” a value to the wrong type and no exception is thrown. That’s type assertion in TS.
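To make that concrete, here is a minimal sketch. The `User` interface and the `raw` value are made up for illustration; the point is only that the assertion compiles and then does nothing at runtime.

```typescript
// Hypothetical type, used only for this illustration.
interface User {
  name: string;
}

// A value whose runtime shape does NOT match User.
const raw: unknown = { id: 42 };

// Type assertion: accepted by the compiler, erased from the emitted JavaScript.
// No check is performed and no exception is thrown here.
const user = raw as User;

// The static type of user.name is string, but at runtime the property is missing.
const kindAtRuntime = typeof user.name;
console.log(kindAtRuntime);
```

The mismatch only shows up later, when some code relies on `user.name` actually being a string.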

In other languages casting has runtime implications.

- Java – “A ClassCastException is thrown if a cast is found at run time to be impermissible.”

- C# (see below)
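For contrast, this is roughly what a runtime-checked cast would have to look like if you wrote it yourself in TypeScript. `checkedCast`, `Cat` and `Dog` are hypothetical names, not part of TypeScript or any library; the sketch only imitates the "throws when the cast is impermissible" behavior and works for class instances only.

```typescript
// Hypothetical classes for the illustration.
class Cat {
  meow(): string { return "meow"; }
}
class Dog {
  bark(): string { return "woof"; }
}

// Hypothetical helper: a cast that is verified at runtime via instanceof.
function checkedCast<T>(value: unknown, ctor: new (...args: any[]) => T): T {
  if (value instanceof ctor) {
    return value;
  }
  throw new TypeError(`Cannot cast value to ${ctor.name}`);
}

const pet: unknown = new Dog();

// Plain assertion: compiles and runs without any complaint.
const assertedCat = pet as Cat;

// Checked version: fails at the point of the cast.
let threw = false;
try {
  checkedCast(pet, Cat);
} catch (e) {
  threw = e instanceof TypeError;
}
console.log(threw);
```

With `as`, the wrong assumption travels silently through the program; with the checked helper, it fails where the assumption is made.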

Reality Check 1

I googled “type assertion vs casting typescript”. The first result is What are the difference between these type assertion or casting methods in TypeScript (Stack Overflow). Oops. People are using “casting” all over, even on Stack Overflow… except for one comment at the end of the page:

(myStr as string) isn’t a cast, it’s a type assertion. So you are telling the compiler that even though it doesn’t know for sure that myStr is a string you know better and it should believe you. It doesn’t actually affect the object itself.

Duncan, Apr 13, 2018 at 14:26

… which is countered by

Coming from C# and since Typescript has many C# features, I’d say it is

user2347763, Apr 13, 2018 at 15:17

I’ll have to disagree, on the grounds that a cast expression in C# “At run time, … might throw an exception.”

Reality Check 2

… whether the underlying data representation is converted from one representation into another, or a given representation is merely reinterpreted as the representation of another data type …

https://en.wikipedia.org/wiki/Type_conversion

So, apparently, type assertion is a specific case of type casting.
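The two cases in that definition can be sketched in TypeScript as follows. This is my own illustration, not something from the documentation; the variable names are arbitrary. Note that `as` is reinterpretation at the type level only, while an actual conversion is an ordinary runtime call such as `Number(...)`.

```typescript
const input: unknown = "42";

// Reinterpretation (type level only): the compiler now treats the value as a number,
// but the runtime value is still the string "42".
const reinterpreted = input as number;
const reinterpretedKind = typeof reinterpreted;

// Conversion: Number() produces a new value with a different representation.
const converted = Number(input);
const convertedKind = typeof converted;

console.log(reinterpretedKind);            // "string"
console.log(convertedKind, converted + 1); // "number" 43
```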

Conclusion

I was wrong to think that “type assertion” is not “type casting”. Apparently, it is. It’s just a specific case. The phrase “In other languages casting has runtime implications.” (that I wrote above) is apparently not correct in the general sense, despite Java and C# popping up immediately.

What I’m still having a hard time understanding is why those articles choose to use the generic wording. I think we should always strive for precise wording.

If you want to be part of search results, fine, I get it. What’s preventing you from writing “In TypeScript, type casting is implemented using type assertions (a special case of type casting) that only have compile-time implications” (or something similar) though?

Takeaways

- Always check official documentation (apparently it needs to be said for the 1000th time)

- If you are posting an article, I recommend sticking to the wording in official documentation, especially when it’s more precise.

Can we make this article more precise? Let me know 🙂