Opening Rant

You don’t hear one developer saying “Just use Notepad” to a colleague with argumentation that goes roughly like this:

Why are you using this horrible Visual Studio Code? It has built-in debugger! No!

JetBrains IDEs? No! They do too much! They are so into the code!

Vim? Emacs? Not pure enough! Who needs that stupid syntax highlighting?

Keep text editing pure! Any semantic understanding by the text editor is undesirable, other programs should handle that. You don’t want to complicate the text editor.

Developers are not saying that because user experience and productivity matter. Yet, “Use Dumb Shell” is considered to be an acceptable opinion. Is that so common that people fall on their heads so hard (alternatively, did not give it any thought)? WTF?

The solution (shell) should be as simple as possible but not simpler than possible. Current shells are simpler than required by good user experience. Wrong trade-off. Keeping something simple is important but not more important than the outcomes.

Image is a link to http://www.workcompass.com/

Additional food for thought:

- Why use a car when bicycle is so much simpler?

- Why use electricity when fire is so much simpler?

- Why have water in your house when a wells are so much simpler?

Background

I was doing consulting. The usual suspects: AWS, bash, Python, Puppet, Chef. Got to Terraform later. I had and I am still having subpar experiences with these tools. Anything I wanted to do, was overly burdensome, complicated and full of pitfalls.

Since I can’t attempt to fix everything, I picked the worst offender and started working on the alternative programming language and shell combo. The motivating opinion is that Ops have no good programming language nor adequate shell.

The absence of good programming language for ops was covered in another post. In this post I will cover some of the things that are wrong with the interactive shell.

The Shell

The dominant player is bash. It didn’t change much for decades: you type commands and get a dump of text on your screen. Most of the alternatives are essentially the same in this regard, for decades.

Is this because of the brilliant design? I would ask: which design? This? Quoting:

I wrote quite complex shell scripts and my first suggestion is “don’t”. The reason is that is fairly easy to make a small mistake that hinders your script, or even make it dangerous.

The “Dumb Shell” Approach

In this post I would like to address common thought that I hear from people regarding Next Generation Shell, a new programming language and a shell that I’m working on. Note that other shells which are more advanced than POSIX shells also get this. Quoting @cup from lobste.rs:

Wouldnt it be better to just have a dumb shell, that can call programs to do heavy lifting (read: programming languages). This way you have a “division of labor”. Shell works best for launching executables, and programming languages work best for handling data structures and algorithms.

No, it would not. I refuse to accept under-powered tools.

Dumbness is Fundamental Flaw

The “dumb shell” has no semantic understanding and doesn’t care about programs’ inputs nor outputs. Let’s see how it plays out.

Today, “Understanding” of programs’ inputs is covered by completion. Completion was added because “dumb shell” had horrible user experience. It’s slightly better now when the shell “understands” programs’ input to some degree. To some people completion is a scope creep. I think of it as better user experience and productivity gain.

“Understanding” of programs’ outputs? We are not there yet. It also seems that interacting with objects on the screen is too novel of an idea for the shell. Considering how much time this idea is out there: WTF?

Let’s see how this “dumbness” manifests as bad user experience even at the very basic, “intended” functionality:

Programs’ Output – Size

Do you know of any real world scenario when a human supposed to go over 10K lines on the screen? I mean just sit there and read it. Let me know. I’ve never seen such use case.

The shell is dumb, the shell “does not intervene” in programs’ outputs. Sounds good until you get unlimited number of lines dumped on your screen.

“Should have used less” you think later. Right. What if you forgot? The buffer is now filled with useless output and you can’t see outputs of previous programs. Are you being punished? No, just nobody cared about the UX. Alternatively, “it would be to complicated to implement”.

Programs’ Output – All Mixed

- Want to know what’s on your screen is stdout and what is stderr? Well… you can’t. Your shell is dumb, it doesn’t deal with things like that.

- Want to know from which program the output came from? Nope. Some programs cope with that to some degree by prepending their name to error messages:

ls xxxgives youls: xxx: No such file or directory. What a wonderful strategy! Keep the shell dumb and push the burden to all the programs. - You can’t type because some background job is continuing to dump text on the screen where you are trying to work? Too bad, should have used redirection because guess what … you shell doesn’t handle that either… and you can’t add redirection after the program is running; again not shell’s business.

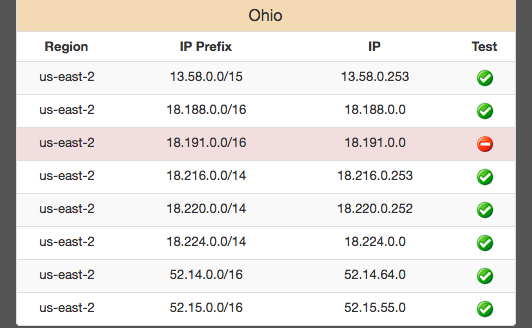

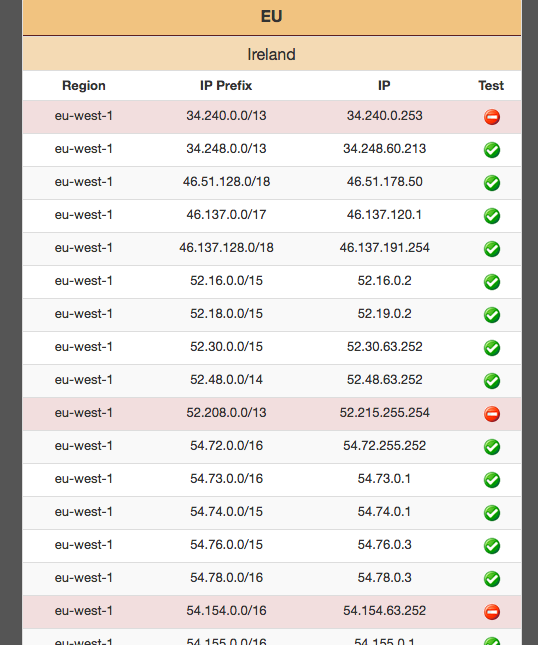

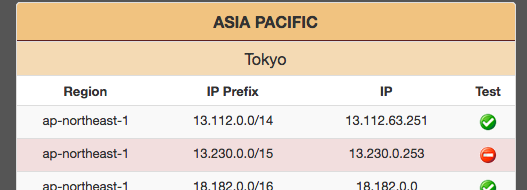

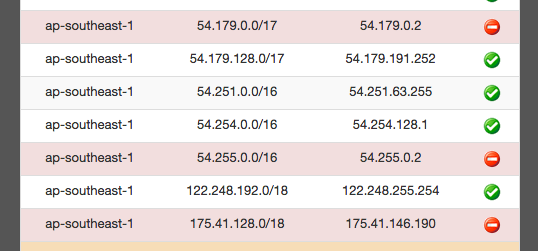

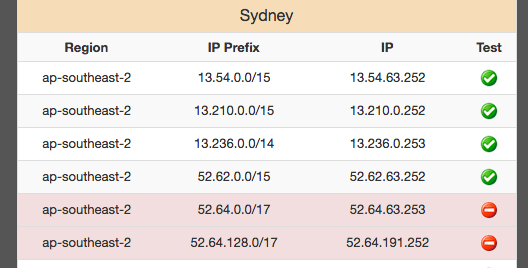

Programs’ Output – Semantic Understanding

You just typed aws ec2 describe instances --filters ... and now you have some output.

You now see on your screen instance you would like to stop. The ID of the instance is right in front of your face. Now you type aws ec2 stop-instances --instance-ids. You would like to append the instance ID that you see on the screen. Nope. Your shell doesn’t do that. Too dumb. Select with the mouse and paste, because f*ck you!

Side note: amazing AWS engineers did not include any human readable output format so you get JSON dumped on your screen (or any other format which is still non-human-compatible).

Let’s imagine for a moment that the command output had some semantic meaning to the shell.

- The shell would display the output as a table.

- The table would be interactive (interactive output, what a heresy!) and one could navigate with arrow keys and have a shortcut for copy/paste the current cell value to the command line (for completion).

- You could interact with the objects in the table with the mouse (very new concept, another heresy for the shell).

- How about instead of typing

aws ec2 stop-instances --instance-idsyou navigate to the correct line, press enter, choose “stop” from the menu and the command is constructed for you?aws ec2 stop-instances --instance-ids i-123...amazing, ha? Well, your shell can’t do that.

Meaning, do you speak it mo***er?

How about after performing operations using the UI you would get as per your choice one of the below snippets which would re-create the operation:

- CLI commands

- CloudFormation tempalte

- Terraform “code”

Solution: UI for the Shell

Suppose I agree for a second, what do you suggest?

https://github.com/ngs-lang/ngs/wiki/UI-Design

I personally don’t see how the described features could be implemented as external programs, keeping the shell “dumb”.

We Can Do Better Today

The reality has changed. What was once amazing is subpar by today’s standards. The world outside of the shell moved forward while the shell stayed almost the same. Brilliant design? Brilliant what?

Let’s move this industry together from the stone age of bash shell to the bronze age of something a bit less subpar – Next Generation Shell.

Closing Rant

Imaginary UNIX people:

We wanted to separate things because they are semantically different so we split the things into stdout and stderr. Well… stderr was is actually for everything that is not stdout.

One bit of metadata (stdout vs stderr) for semantic meaning of the output should be enough for everyone forever. Well… at least it’s simple for us to implement.

Update: discussion on lobste.rs